You have probably heard that Amazon tried to use AI to screen job candidates but had to scrap the whole thing. The system picked candidates based on patterns in past resumes, and because most of those resumes came from men, it learned to downgrade applications from women. The case showed how AI, if not handled carefully, can make the hiring process less fair rather than more.

Bias in AI can cause serious problems. It erodes trust between employers and employees, and it can lead to lawsuits or damage a company’s reputation. Just as importantly, it undermines real diversity and fairness in the workforce. If you work in HR, this is something you cannot ignore.

This article explains what ethical AI in HR looks like in practical terms, why fairness matters in 2025, and how businesses can use AI responsibly without cutting corners.

What Ethical AI in HR Means

Ethical AI in HR means using artificial intelligence in a way that treats everyone fairly, makes its decisions explainable, and holds both the system and its users accountable. Instead of letting algorithms operate as black boxes, ethical AI promotes transparency, so that HR teams and candidates can understand how choices are made.

There are a few key principles that guide ethical AI in HR:

- Fairness: AI should avoid favouring one group over another. It must give everyone a fair chance, regardless of gender, race, age, or background.

- Accountability: Someone must be responsible for how AI decisions affect people. HR teams need to check and correct AI errors or biases.

- Transparency: AI systems should clearly show how they reach conclusions. Hidden or “black-box” AI causes distrust and can hide unfairness.

- Explainability: It should be easy to explain why AI picked a candidate or gave a certain performance score. This helps HR understand and trust the technology.

These principles are what separate ethical AI from AI in general. Many AI tools are built purely to speed up hiring or reviews and never check whether their choices are fair. That can lead to problems such as biased resume screening, where qualified candidates are unfairly rejected, or performance scores that favour certain groups.

When ethical principles are ignored, businesses risk damaging their reputation, losing the trust of employees and candidates, and even facing legal trouble. This is why ethical AI is essential for helping companies make better, fairer decisions and build stronger teams.

How to Build or Choose Ethical AI for HR Workflows

When HR teams bring AI tools into their processes, the first step is asking the right questions. Not all AI is built the same, and it pays to be careful. Here are the key points every HR leader should check before trusting any AI system.

1. Ask about explainability.

Can the AI-powered HR platform clearly show how it makes decisions? Explainability means you can understand why a candidate was ranked a certain way or why a performance score was given. Without that clarity, it’s hard to trust the results or spot mistakes.
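To make explainability concrete, here is a minimal sketch of a transparent, additive scoring model that reports how much each input contributed to a candidate’s score. The feature names and weights are hypothetical, chosen only for illustration; real platforms may use very different models, and more complex models need dedicated explanation techniques.

```python
from dataclasses import dataclass

# Hypothetical features and weights, for illustration only.
WEIGHTS: dict[str, float] = {
    "years_relevant_experience": 2.0,
    "required_skills_matched": 3.0,
    "assessment_score": 0.05,
}


@dataclass
class ScoreExplanation:
    total: float
    contributions: dict[str, float]


def score_with_explanation(features: dict[str, float]) -> ScoreExplanation:
    """Score a candidate with an additive model and keep each feature's contribution."""
    contributions = {name: weight * features.get(name, 0.0) for name, weight in WEIGHTS.items()}
    return ScoreExplanation(total=sum(contributions.values()), contributions=contributions)


def explain(result: ScoreExplanation) -> str:
    """Render the score with contributions listed from largest to smallest."""
    lines = [f"Total score: {result.total:.2f}"]
    for name, value in sorted(result.contributions.items(), key=lambda kv: kv[1], reverse=True):
        lines.append(f"  {name}: {value:+.2f}")
    return "\n".join(lines)


candidate = {"years_relevant_experience": 4, "required_skills_matched": 5, "assessment_score": 80}
print(explain(score_with_explanation(candidate)))
```

Because every contribution is visible, a reviewer can see at a glance which factor drove a ranking and challenge it if it looks wrong.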

2. Find out if the tool keeps audit trails.

This means the system records every decision and action it takes. With audit trails, you can go back and review how the AI arrived at its conclusions. This helps with accountability and is especially important if you face questions from regulators or candidates.
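As a rough illustration, an audit trail can be as simple as an append-only log in which each entry records the inputs, the model version, the outcome, and the reason given. The sketch below, with hypothetical field names, also chains each entry to the previous one with a SHA-256 hash so that later edits to the log can be detected. It keeps entries in memory; a production system would write them to durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    # Sorted keys give a stable JSON encoding, so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only, hash-chained log of AI decisions (in-memory sketch)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, candidate_id: str, model_version: str, inputs: dict, output: str, reason: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "candidate_id": candidate_id,
            "model_version": model_version,
            "inputs": inputs,  # must be JSON-serialisable
            "output": output,
            "reason": reason,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = _digest(entry)  # hash covers every field above, including prev_hash
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was edited or reordered after being logged.

        Deleting the newest entries is not detectable unless the latest hash is stored elsewhere.
        """
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("cand-001", "screener-v1", {"skills_matched": 5}, "advance", "Matched 5 of 6 required skills")
trail.record("cand-002", "screener-v1", {"skills_matched": 2}, "reject", "Matched 2 of 6 required skills")
print(trail.verify())  # True
trail.entries[1]["output"] = "advance"  # simulate tampering with a logged decision
print(trail.verify())  # False
```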

3. Check the diversity of training data.

AI learns from past data, so if the training dataset lacks variety in gender, ethnicity, or background, the AI will carry those biases forward. Ethical AI must be trained on data that fairly reflects different groups to avoid repeating old patterns of discrimination.
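One simple check, sketched below under stated assumptions, compares each group’s share of the training data with a reference share, such as its share of the relevant applicant pool. The group labels, the reference shares, and the 0.8 flagging threshold are all hypothetical choices for illustration.

```python
from collections import Counter


def representation_report(
    training_groups: list[str],
    reference_shares: dict[str, float],
    min_ratio: float = 0.8,  # hypothetical threshold
) -> dict[str, tuple[float, float, bool]]:
    """For each group, return (share in training data, reference share, flagged).

    A group is flagged when its training share falls below min_ratio times its reference share.
    """
    counts = Counter(training_groups)
    total = len(training_groups)
    report = {}
    for group, reference in reference_shares.items():
        share = counts[group] / total if total else 0.0
        report[group] = (share, reference, share < min_ratio * reference)
    return report


# Illustrative data: past hires skewed 80/20, compared with a hypothetical 50/50 applicant pool.
past_hires = ["men"] * 80 + ["women"] * 20
for group, (share, reference, flagged) in representation_report(past_hires, {"men": 0.5, "women": 0.5}).items():
    print(f"{group}: {share:.0%} of training data vs {reference:.0%} reference, flagged={flagged}")
```

Balanced representation is necessary but not sufficient: if historical outcomes (who was hired or promoted) were themselves biased, a model can still learn that bias from the labels.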

4. Remember, humans still play a key role.

AI is a helper, not a judge. HR teams must review automated decisions and keep final control. This human check prevents errors and adds a layer of fairness that no machine can replace.

Choosing or building ethical AI in HR requires attention to these details. When done right, it supports fair hiring, boosts trust, and reduces risks.

Applying Ethical AI to Hiring, Screening, and Performance

Putting ethical AI in HR to work means turning ideas into everyday actions. Here’s what ethical AI looks like when it truly respects fairness and avoids bias.

First, AI screeners should evaluate all candidates on the same basis, without favouring prestigious schools or particular names. For example, the system should not give someone an extra edge just because they attended an Ivy League university. Instead, it should focus on relevant skills and experience.
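One way to keep prestige and identity signals out of automated screening is to strip them from an application before it reaches the scoring step. This is a minimal sketch; the field names are hypothetical and would depend on your applicant-tracking system.

```python
# Hypothetical field names; the real ones depend on your applicant-tracking system.
REDACTED_FIELDS = {"name", "email", "photo_url", "date_of_birth", "university", "address"}


def redact_for_screening(application: dict) -> dict:
    """Return a copy of the application without fields that can signal identity or prestige."""
    return {field: value for field, value in application.items() if field not in REDACTED_FIELDS}


application = {
    "name": "Jordan Example",
    "university": "Example University",
    "skills": ["SQL", "stakeholder management"],
    "years_relevant_experience": 4,
}
print(redact_for_screening(application))
# {'skills': ['SQL', 'stakeholder management'], 'years_relevant_experience': 4}
```

Redaction reduces, but does not eliminate, proxy signals: free-text fields such as a resume summary can still reveal a candidate’s school, gender, or age.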

Second, interview bots need to ask the same role-based questions of every candidate. This consistency leaves less room for personal bias to creep into the interview process. When all candidates are assessed against the same criteria, HR teams get clear, comparable answers.

Third, performance scores must reflect the full picture. Ethical AI adjusts for context such as team size or available resources. If someone leads a small team with limited tools, their performance score shouldn’t be unfairly low compared with a manager who has more support. This gives a more honest view of each person’s contributions.
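One possible way to “adjust for context” is to compare each person with peers in a similar situation rather than with everyone. The sketch below groups employees into hypothetical peer groups (for example, by team-size band) and converts raw scores into z-scores within each group. This is an assumed approach for illustration, not the method any particular tool uses.

```python
from collections import defaultdict
from statistics import mean, pstdev


def normalize_within_peer_groups(records: list[dict]) -> dict[str, float]:
    """Convert raw scores to z-scores relative to others in the same (hypothetical) peer group.

    Each record needs 'employee', 'peer_group', and 'raw_score' keys.
    """
    scores_by_group: dict[str, list[float]] = defaultdict(list)
    for r in records:
        scores_by_group[r["peer_group"]].append(r["raw_score"])

    # Mean and population standard deviation for each peer group.
    stats = {g: (mean(s), pstdev(s)) for g, s in scores_by_group.items()}

    normalized = {}
    for r in records:
        mu, sigma = stats[r["peer_group"]]
        # If everyone in the group scored the same, treat them all as average (z = 0).
        normalized[r["employee"]] = 0.0 if sigma == 0 else (r["raw_score"] - mu) / sigma
    return normalized


records = [
    {"employee": "A", "peer_group": "small_team", "raw_score": 60},
    {"employee": "B", "peer_group": "small_team", "raw_score": 40},
    {"employee": "C", "peer_group": "large_team", "raw_score": 90},
    {"employee": "D", "peer_group": "large_team", "raw_score": 70},
]
print(normalize_within_peer_groups(records))
# {'A': 1.0, 'B': -1.0, 'C': 1.0, 'D': -1.0}
```

Here A’s raw 60 and C’s raw 90 both come out at +1.0, because each stands out by the same amount among their own peers. With very small peer groups, z-scores become noisy, so the grouping itself needs care and human review.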

HR teams can also make small changes right now to improve fairness. One step is standardising job descriptions and interview questions. Another is regularly reviewing AI decisions for patterns that suggest bias, such as one group being screened out at a noticeably higher rate than others.
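For the regular review step, a common starting point in US practice is the “four-fifths rule” from the Uniform Guidelines on Employee Selection Procedures: if one group’s selection rate is less than 80% of the rate for the group with the highest rate, that is generally treated as evidence of possible adverse impact. The sketch below computes these impact ratios from a list of (group, selected) outcomes; the group labels and data shape are assumptions.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Selection rate per group from (group, was_selected) pairs."""
    applicants: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        applicants[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / applicants[g] for g in applicants}


def adverse_impact_report(
    outcomes: list[tuple[str, bool]], threshold: float = 0.8
) -> dict[str, tuple[float, bool]]:
    """Return (impact ratio, flagged) per group, relative to the group with the highest selection rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / highest if highest > 0 else 1.0
        report[group] = (ratio, ratio < threshold)
    return report


# Illustrative data: group A has 40 of 100 selected, group B has 24 of 100 selected.
outcomes = [("A", True)] * 40 + [("A", False)] * 60 + [("B", True)] * 24 + [("B", False)] * 76
for group, (ratio, flagged) in adverse_impact_report(outcomes).items():
    print(f"group {group}: impact ratio {ratio:.2f}, flagged={flagged}")
```

The four-fifths rule is a rule of thumb rather than a definitive legal test; with small numbers of applicants, a statistical significance test is also worth running before drawing conclusions.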

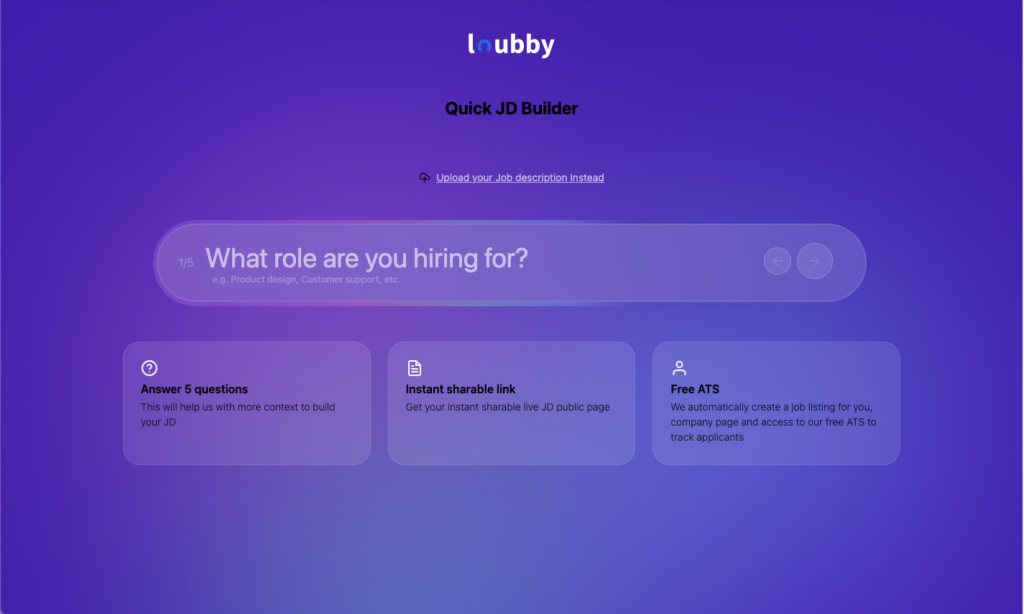

Tools like Loubby’s AI Interviewer and assessment engine are built with fairness at the core. They balance data-driven automation with human insight to keep hiring and reviews equitable. These tools make it easier for HR to apply ethical AI without losing speed or accuracy.

Compliance and Reputation Risks of Ignoring Ethics in AI

Ignoring ethics in AI can lead to serious problems for any company. Laws and regulations are already in place, and more are coming, to hold businesses accountable for how they use AI in hiring and employee management. For instance, the EU AI Act classifies AI systems used for recruitment and worker management as high-risk and sets requirements for data quality, transparency, and human oversight. In the US, the EEOC enforces anti-discrimination laws that apply to recruitment and evaluation decisions, including those made with AI tools. Local labor laws also require companies to treat workers fairly, which extends to how AI tools are used.

Failing to follow these rules can damage a company’s reputation. Job seekers today research employers carefully and share their experiences online. If a company gains a reputation for biased or unfair treatment, it can lose the trust of potential candidates. This hurts employer branding and makes it harder to attract top talent.

Inside the company, unfairness can lower employee morale. When workers feel the system is unfair or biased, they become disengaged. This can affect productivity and increase turnover. Worse, companies may face costly lawsuits if AI tools lead to discrimination claims. These legal battles drain resources and distract leadership from growing the business.

Using ethical AI in HR is about protecting your company’s good name and creating a workplace where people feel valued.

Conclusion

Ethical AI in HR is a responsibility that every company must take seriously. Building a fair, inclusive, and compliant hiring system requires the right mindset and tools that prioritize transparency and equality.

When you commit to ethical AI, you create an environment where candidates are judged by their skills and potential, not by hidden prejudices in algorithms. This approach not only protects your company from legal risks but also strengthens your reputation and helps you attract the best talent.

If you want to hire smarter, faster, and with fairness at the core, book a demo with Loubby AI and experience how ethical automation can transform your hiring process. See firsthand how technology and fairness can work hand in hand to build stronger, more diverse teams.