Reducing hiring bias with AI has become essential for companies that want to hire the best talent fairly. When algorithms replace human judgment in early screening, they can either reduce discrimination or amplify it, depending on how you design them.

Research from the National Bureau of Economic Research confirms what many people already suspect: applicants with white-sounding names received 50% more callbacks than applicants with Black-sounding names, even when the resumes were identical in every other way.

Hiring bias doesn’t just hurt job seekers. It costs companies real money and top talent. When you filter people based on factors unrelated to job performance, you miss skilled professionals who could move your business forward. That’s why reducing hiring bias with AI tools has become a strategic priority for forward-thinking organizations.

AI promises to fix this problem. But here’s the reality: AI can reduce bias or make it worse, depending on how you use it. This guide shows you the practices that actually work and helps you avoid mistakes that create new problems.

Why Hiring Bias Persists (and What It Costs Your Company)

Bias shows up at every stage of hiring. First, recruiters screen resumes in seconds, often making snap judgments based on names, schools, or employment gaps. Then, interviews favor candidates who remind interviewers of themselves or fit certain stereotypes. Even job descriptions contain language that discourages qualified people from applying.

The numbers tell a clear story. According to the same NBER study, a candidate named “Jamal” needed to send 50% more resumes than a candidate named “Greg” to receive the same number of callbacks. That’s not about skills. That’s bias working quietly in the background.

Moreover, 31% of candidates report experiencing unconscious bias during hiring, according to industry surveys. Gender bias, age bias, and educational bias all limit your talent pool and create workplaces that lack diverse perspectives.

The business costs add up fast. Companies with low diversity often see higher turnover rates. Teams without varied viewpoints miss opportunities and make worse decisions. Plus, there’s the legal risk: discrimination lawsuits are expensive and can do lasting damage to a company’s reputation.

Here’s the thing: most hiring bias isn’t intentional. People don’t wake up wanting to be unfair. But unconscious bias exists in all of us. It influences decisions before we even realize we’re making them. That’s where AI recruitment tools can help if you implement them correctly.

The AI Bias Paradox: How Technology Can Help or Hurt

AI sounds like the perfect solution to bias. After all, machines don’t have feelings or prejudices, right?

Not exactly. AI learns from data. If that data reflects human bias, the AI will too.

A University of Washington study found something striking. When researchers tested AI models on resume screening, the models favored resumes with white-associated names 85% of the time and resumes with Black-associated names just 9% of the time. The models weren’t programmed to discriminate. Instead, they learned patterns from data that already contained bias.

The same thing happens when companies train AI on their own past hiring decisions. For instance, if your company historically hired more men than women for technical roles, the AI notices that pattern. As a result, it starts flagging male candidates as better fits, not because they’re more qualified, but because the data shows that’s who got hired.

So does this mean AI makes bias worse? Not necessarily. When designed and monitored properly, AI can reduce bias more effectively than humans working alone. Here’s why: AI can be audited, adjusted, and held to consistent standards. Humans are harder to correct because we often don’t recognize our own biases.

The key is understanding that AI is a tool. Like any tool, it works well or poorly depending on how you use it. The practices below show you how to use AI to reduce hiring bias rather than amplify it.

Best Practice 1: Start with Diverse, Representative Training Data

Your AI is only as fair as the data you feed it. If you train an AI hiring system on resumes from the past ten years and those resumes heavily favor one demographic, your AI will favor that demographic going forward.

Start by auditing your training data. Look at the candidates your system will learn from. Do they represent the diversity you want in your company? If not, you need to adjust before training begins.
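
If your historical records include self-reported demographic data (collected for auditing only, never used as a model input), the comparison can be as simple as the Python sketch below. The field name `group`, the reference shares, and the 5-point tolerance are illustrative assumptions, not a standard; in practice you might compare against your applicant pool or the relevant labor market.

```python
from collections import Counter


def representation_gaps(records, reference_shares, field="group", tolerance=0.05):
    """Compare each group's share of the training data with a reference share.

    Returns {group: (observed_share, reference_share)} for every group whose
    observed share falls short of its reference share by more than `tolerance`.
    """
    counts = Counter(r[field] for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = (round(observed, 3), expected)
    return gaps


# Hypothetical historical hires: 80 from group A, 20 from group B,
# compared against an applicant pool that is 55% A and 45% B.
history = [{"group": "A"}] * 80 + [{"group": "B"}] * 20
print(representation_gaps(history, {"A": 0.55, "B": 0.45}))  # {'B': (0.2, 0.45)}
```

An empty result doesn’t prove the data is fair, but a non-empty one tells you where to look before training begins.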

One effective approach is to anonymize historical data and focus the AI on skills and outcomes rather than demographics. Another option is to supplement your data with external sources that provide broader representation.

Regular updates matter too. Even if you start with good data, bias can creep in over time. Therefore, your AI should be retrained periodically with fresh, diverse data to prevent old patterns from becoming permanent.

This isn’t a one-time fix. It’s an ongoing commitment to making sure your AI learns from the right examples.

Best Practice 2: Use Structured Interviews to Reduce Hiring Bias with AI

Unstructured interviews are where bias thrives. When interviewers ask whatever comes to mind and judge answers based on gut feeling, personal preferences take over. One candidate gets asked about hobbies. Another gets grilled on technical details. The lack of consistency makes fair comparison impossible.

Structured interviews fix this problem. Every candidate answers the same questions in the same order. Responses are scored using predefined criteria.
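
Here’s a minimal Python sketch of what “predefined criteria” can look like in practice, assuming each answer is rated 1 to 5 against an anchored rubric and each question carries a weight that is fixed before any interview takes place. The questions and weights are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    text: str
    weight: float  # relative importance, set before any interview happens


# Hypothetical question set: every candidate gets these, in this order.
QUESTIONS = [
    Question("Describe a time you resolved conflicting requirements.", 0.4),
    Question("Walk through how you would debug a failing report.", 0.6),
]


def structured_score(ratings, questions=QUESTIONS, scale_max=5):
    """Combine per-question rubric ratings (1..scale_max) into a 0-1 score."""
    if len(ratings) != len(questions):
        raise ValueError("every candidate must be rated on every question")
    for r in ratings:
        if not 1 <= r <= scale_max:
            raise ValueError(f"rating {r} is outside 1..{scale_max}")
    total_weight = sum(q.weight for q in questions)
    weighted = sum(q.weight * r for q, r in zip(questions, ratings))
    return weighted / (total_weight * scale_max)


print(round(structured_score([4, 5]), 2))  # 0.92
```

Refusing to score a candidate who wasn’t asked every question is the point: it keeps comparisons like-for-like.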

AI takes this even further. Modern AI interviewing tools conduct structured interviews and analyze responses in real time. Every candidate gets the same questions, delivered in the same way. The AI scores answers based on content, not on whether an interviewer liked the candidate’s personality or background.

What’s more, structured interviews have higher predictive validity than unstructured interviews, which means they’re better at identifying who will actually succeed in the role. When you combine structure with AI analysis, you tackle two sources of bias at once: inconsistent questions and subjective scoring.

Best Practice 3: Focus on Skills-Based Assessments Over Credentials

Degrees and brand-name companies on resumes create bias. Recruiters see a diploma from a prestigious university and assume the candidate is qualified. They see an employment gap and assume something is wrong. Neither assumption is reliable.

What truly matters is whether someone can do the work, not which degree they hold. Skills-based assessments test that directly.

Instead of filtering by education, give candidates a task that reflects the actual job. For example, if you’re hiring a data analyst, provide a dataset and ask them to find insights. If you need a content writer, ask them to write something. The work speaks for itself.

AI-powered assessment tools can grade these tests consistently, applying the same criteria to every candidate. They check whether the code runs, whether the analysis is accurate, and whether the writing is clear. They don’t care where the candidate went to school or what their name is.
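
As a rough illustration of automated grading for a coding task, the Python sketch below runs every submission against the same hidden test cases and reports the share that pass. The median task, the test cases, and the sample submission are hypothetical, and a real platform would run untrusted candidate code in a sandbox rather than in-process like this.

```python
def grade_submission(candidate_fn, test_cases):
    """Run a candidate's function against the shared test cases.

    Returns the fraction of cases that pass. Any exception counts as a failed case.
    """
    if not test_cases:
        raise ValueError("need at least one test case")
    passed = 0
    for args, expected in test_cases:
        try:
            if candidate_fn(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on one case is a failed case, not a failed candidate
    return passed / len(test_cases)


# Hypothetical task: "return the median of a non-empty list of numbers".
TESTS = [
    (([3, 1, 2],), 2),
    (([4, 1, 3, 2],), 2.5),
    (([7],), 7),
]


def submission(xs):
    """What one candidate might submit: correct for odd-length lists only."""
    s = sorted(xs)
    return s[len(s) // 2]


print(grade_submission(submission, TESTS))  # 2 of 3 cases pass
```

The grader never sees a name or a school, only the function and its results.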

This approach opens your talent pool significantly. You’ll find skilled people who learned through bootcamps, self-study, or work experience rather than traditional education. Many of these candidates bring fresh perspectives and strong problem-solving skills precisely because they took unconventional paths.

By using skills-based hiring approaches, you move away from credential-based filtering and toward merit-based selection. This is one of the most effective ways to reduce hiring bias with AI.

Best Practice 4: Build in Human Oversight for AI Decisions

AI should support human decision-making, not replace it. The most successful bias reduction strategies combine AI efficiency with human judgment.

Here’s why both matter. AI can process hundreds of resumes quickly and consistently. It won’t get tired or distracted. However, AI can’t understand context the way humans can. It might flag a career gap as negative when the candidate was caring for a sick family member or starting a business.

Research shows that organizations that employed human oversight along with AI experienced a 45% reduction in biased decisions. The combination works better than either alone.

Set up a review process where hiring managers can see why the AI made certain recommendations. If the reasoning doesn’t make sense, they should be able to override it. Then, track these overrides. If you see patterns where humans consistently disagree with the AI, that’s a signal that something needs adjustment.
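
Override tracking doesn’t need special tooling to get started. The Python sketch below assumes each decision is logged with the AI’s recommendation, the reviewer’s final call, and the reason code the AI gave (all hypothetical field names), then flags reason codes that reviewers override more than 30% of the time. That threshold is an arbitrary starting point, not an established benchmark.

```python
from collections import defaultdict


def override_rates(decisions, key="ai_reason"):
    """Share of AI recommendations a human reviewer overrode, grouped by `key`."""
    totals = defaultdict(int)
    overrides = defaultdict(int)
    for d in decisions:
        totals[d[key]] += 1
        if d["ai_recommendation"] != d["human_decision"]:
            overrides[d[key]] += 1
    return {k: overrides[k] / totals[k] for k in totals}


def flag_patterns(rates, threshold=0.3):
    """Reason codes that humans disagree with often enough to warrant review."""
    return sorted(k for k, r in rates.items() if r > threshold)


# Hypothetical decision log.
log = [
    {"ai_reason": "employment_gap", "ai_recommendation": "reject", "human_decision": "advance"},
    {"ai_reason": "employment_gap", "ai_recommendation": "reject", "human_decision": "advance"},
    {"ai_reason": "employment_gap", "ai_recommendation": "reject", "human_decision": "reject"},
    {"ai_reason": "skills_match", "ai_recommendation": "advance", "human_decision": "advance"},
    {"ai_reason": "skills_match", "ai_recommendation": "advance", "human_decision": "advance"},
]
rates = override_rates(log)
print(flag_patterns(rates))  # ['employment_gap']
```

In this toy log, reviewers keep overturning rejections that cite an employment gap, which is exactly the kind of pattern worth taking back to the model.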

Human oversight also helps you catch new forms of bias that the AI might develop. Technology changes, and so do the ways bias can appear. Keeping humans in the loop means you can spot and fix problems before they become systemic.

Best Practice 5: Conduct Regular Bias Audits on Your AI Systems

Even well-designed AI systems can develop bias over time. That’s why regular audits are non-negotiable. You need to check whether your AI is making fair decisions and adjust when it’s not.

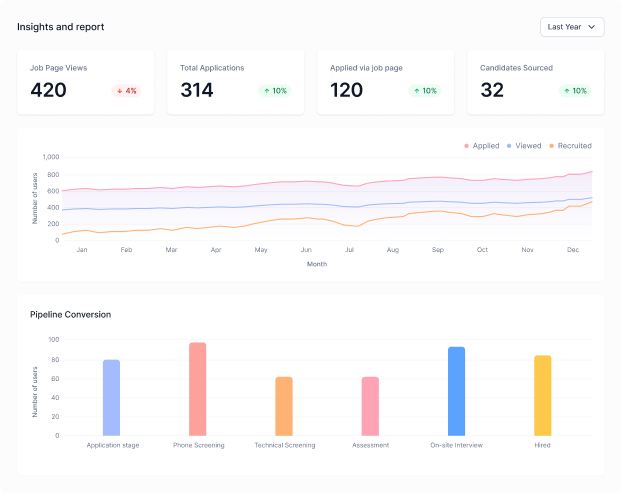

Start by tracking demographic data at each stage of your hiring funnel. How many candidates from different backgrounds make it through the screening? How many get interviews? How many receive offers? If you see significant drop-offs at any stage, investigate why.
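
One widely used yardstick for a “significant” drop-off is the four-fifths rule from the US Uniform Guidelines on Employee Selection Procedures: if a group’s selection rate at a stage is less than 80% of the highest group’s rate, that’s commonly treated as a sign of adverse impact worth investigating. The Python sketch below applies that check to a single stage using hypothetical counts; you’d repeat it for interviews and offers. It’s a screening heuristic, not a legal determination or a substitute for a statistical significance test.

```python
def stage_selection_rates(entered, advanced):
    """Selection rate per group at one funnel stage: advanced / entered."""
    return {g: advanced.get(g, 0) / n for g, n in entered.items() if n > 0}


def adverse_impact(rates, threshold=0.8):
    """Impact ratio of each group vs. the highest-rate group, plus the groups
    that fall below `threshold` (the four-fifths rule of thumb)."""
    best = max(rates.values())
    ratios = {g: r / best for g, r in rates.items()} if best > 0 else {}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Hypothetical screening-stage counts per self-reported group.
screened = {"group_a": 200, "group_b": 150}
advanced = {"group_a": 60, "group_b": 27}

rates = stage_selection_rates(screened, advanced)
ratios, flagged = adverse_impact(rates)
print(rates)    # {'group_a': 0.3, 'group_b': 0.18}
print(flagged)  # ['group_b']
```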

Next, look at the AI’s decision patterns. Is it favoring certain schools, neighborhoods, or previous employers? These preferences might seem neutral, but they often act as proxies for demographic factors.

Test your AI with identical resumes that differ only in names or other demographic signals. If the AI treats them differently, you’ve found bias that needs fixing.
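
A paired-resume test is easy to automate. In the Python sketch below, `score_fn` stands in for whatever scoring call your screening system exposes (a hypothetical hook, not any particular vendor’s API), and the two toy scorers exist only to show what a passing and a failing result look like. The first names echo the NBER study mentioned earlier.

```python
def name_swap_audit(score_fn, template, name_pairs, tolerance=0.01):
    """Score resumes that are identical except for the candidate's name.

    `template` contains a "{name}" placeholder and `score_fn` stands in for
    your screening model's scoring call. Returns every pair whose scores
    differ by more than `tolerance`.
    """
    flagged = []
    for name_a, name_b in name_pairs:
        # str.replace (not str.format) so braces elsewhere in a resume are safe.
        score_a = score_fn(template.replace("{name}", name_a))
        score_b = score_fn(template.replace("{name}", name_b))
        if abs(score_a - score_b) > tolerance:
            flagged.append((name_a, name_b, score_a, score_b))
    return flagged


SKILLS = ("sql", "python", "dashboards")


def keyword_scorer(text):
    """Toy scorer that only counts skill keywords, so the name cannot matter."""
    lowered = text.lower()
    return sum(skill in lowered for skill in SKILLS) / len(SKILLS)


def leaky_scorer(text):
    """Toy scorer with a deliberate name-based leak, to show what a failure looks like."""
    return keyword_scorer(text) + (0.1 if "Greg" in text else 0.0)


resume = "{name}\nData analyst. Built dashboards in SQL and Python."
pairs = [("Greg", "Jamal"), ("Emily", "Lakisha")]

print(name_swap_audit(keyword_scorer, resume, pairs))     # []
print(len(name_swap_audit(leaky_scorer, resume, pairs)))  # 1
```

A real audit would use many name pairs and many resume templates, since a single pair can pass or fail by chance.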

Schedule these audits quarterly or at least twice a year. Make someone responsible for conducting them and reporting results. Without accountability, bias audits become something people plan to do but never actually complete.

When you find bias, don’t just note it. Fix it. Retrain your AI, adjust your criteria, or rethink your process. An audit is only useful if it leads to action.

Best Practice 6: Implement Blind Screening Where Appropriate

Blind screening removes identifying information from applications before anyone reviews them. Names, photos, addresses, and schools get hidden. Reviewers see only skills, experience, and work samples.

This approach works especially well in early screening stages. It forces you to evaluate candidates based on what they can do rather than who they are or where they come from.

Some companies worry that removing this information feels impersonal or makes it harder to assess cultural fit. That’s a valid concern. The key is knowing when to use blind screening and when to reveal full information.

Use it for initial resume reviews and skills assessments. Once you’ve narrowed down to qualified candidates, you can reveal identities for interviews and final selection. This way, bias doesn’t eliminate good candidates before they get a fair chance.

AI makes blind screening easier. Systems can automatically strip identifying information and present sanitized applications to reviewers. When the time comes to reveal identities, the AI can restore the full data.
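
The mechanics are straightforward. This Python sketch assumes applications arrive as structured records with hypothetical field names, hides the identifying ones behind a random candidate ID, and keeps the originals aside until you’re ready to reveal them.

```python
import uuid

# Fields hidden during early screening (an assumption; adjust to your own policy).
IDENTIFYING_FIELDS = ("name", "photo_url", "address", "school")


class BlindScreen:
    """Hide identifying fields for early review and restore them later."""

    def __init__(self, hidden_fields=IDENTIFYING_FIELDS):
        self.hidden_fields = set(hidden_fields)
        self._vault = {}  # anonymous candidate id -> original identifying values

    def redact(self, application):
        """Return a copy of the application without identifying fields."""
        candidate_id = uuid.uuid4().hex
        self._vault[candidate_id] = {
            k: v for k, v in application.items() if k in self.hidden_fields
        }
        sanitized = {k: v for k, v in application.items() if k not in self.hidden_fields}
        sanitized["candidate_id"] = candidate_id
        return sanitized

    def reveal(self, sanitized):
        """Merge the stored identifying fields back in, e.g. before interviews."""
        restored = dict(sanitized)
        restored.update(self._vault[sanitized["candidate_id"]])
        return restored


screen = BlindScreen()
application = {
    "name": "Sample Candidate",
    "school": "Example University",
    "skills": ["SQL", "Python"],
    "years_experience": 4,
}
view = screen.redact(application)
print(sorted(view))  # ['candidate_id', 'skills', 'years_experience']
full = screen.reveal(view)
```

Note that this only hides structured fields. Names, addresses, and schools that appear inside free-text resumes or cover letters need separate redaction, which is harder to do reliably.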

Balance matters here. You want to reduce bias without making candidates feel like you’re erasing their identities. Some people want you to know their background because it’s part of their story. The goal is fairness, not invisibility.

How Modern AI Hiring Tools Are Changing Fair Recruitment

Bias reduction shouldn’t slow down your hiring process. With the right AI hiring tools, you can make decisions faster and fairer at the same time.

Modern platforms address bias at multiple stages. For instance, AI-powered job description builders create postings that attract diverse candidates by removing gendered or exclusionary language. Research shows certain words in job posts discourage women or older workers from applying. These tools catch problematic language and suggest neutral alternatives.
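
To show the basic idea, here’s a Python sketch of a wording check that flags listed terms and suggests alternatives. The word list is a small illustrative example, not a validated lexicon; a production tool would need a much larger, research-informed list and some awareness of context.

```python
import re

# Small illustrative list of flagged terms and neutral alternatives.
# Not a validated lexicon: a production tool would need a far larger,
# research-informed list and some awareness of context.
SUGGESTIONS = {
    "rockstar": "skilled professional",
    "ninja": "expert",
    "dominant": "leading",
    "aggressive": "proactive",
    "digital native": "comfortable with digital tools",
}


def flag_language(posting):
    """Return (term, suggestion) pairs for each listed term found in the posting."""
    lowered = posting.lower()
    return [
        (term, suggestion)
        for term, suggestion in SUGGESTIONS.items()
        # \b word boundaries avoid matching inside longer words
        # (e.g. "dominant" inside "predominantly").
        if re.search(r"\b" + re.escape(term) + r"\b", lowered)
    ]


post = "We want an aggressive sales ninja who is a digital native."
for term, suggestion in flag_language(post):
    print(f"'{term}' -> consider '{suggestion}'")
```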

Additionally, AI recruiters source candidates based on skills and experience rather than demographics. They search for people who can do the work and pull from diverse talent pools, so bias has less influence over who gets surfaced. This is reducing hiring bias with AI in action: algorithms that expand opportunity rather than limit it.

AI interviewers conduct structured interviews that give every candidate the same experience. Responses get analyzed for content and competency, not personality or background. This sharply reduces interviewer bias while providing detailed feedback on who’s genuinely qualified.

Skills assessment features test candidates on role-specific abilities. You see what people can actually do, not just what their resume claims. This shifts focus from credentials to capability, opening opportunities for talented people who took non-traditional paths.

Studies indicate that using conversational AI in hiring can lead to significant cost reductions compared to traditional methods. You save money while making better, fairer decisions.

Taking the Next Step Toward Bias-Free Recruitment with AI

Reducing hiring bias with AI isn’t automatic. It takes thoughtful design, regular monitoring, and a commitment to fairness over convenience. But when you get it right, the benefits are clear. You find better candidates faster. You build stronger, more diverse teams. You avoid the legal and reputational risks that come with discriminatory hiring.

Start with the basics. First, audit your training data. Then, implement structured interviews. Next, test for skills instead of filtering by credentials. Build in human oversight and conduct regular bias checks. These practices work whether you’re hiring five people or five hundred.

Remember that AI is a tool, not a magic solution. It amplifies your intentions. If you design systems carelessly, they’ll amplify bias. However, if you design them carefully with fairness in mind, they’ll help you make better decisions than humans can alone.

The future of diversity and inclusion in hiring depends on how we use technology today. By following these best practices for reducing hiring bias with AI, you’re not just improving your recruitment process; you’re building a more equitable workplace for everyone.

Want to see how Loubby AI can help you reduce bias while speeding up hiring? Book a demo and experience structured interviews, skills-based assessments, and AI-powered matching that puts skills first and bias last.